ViseJoint virtual encoders

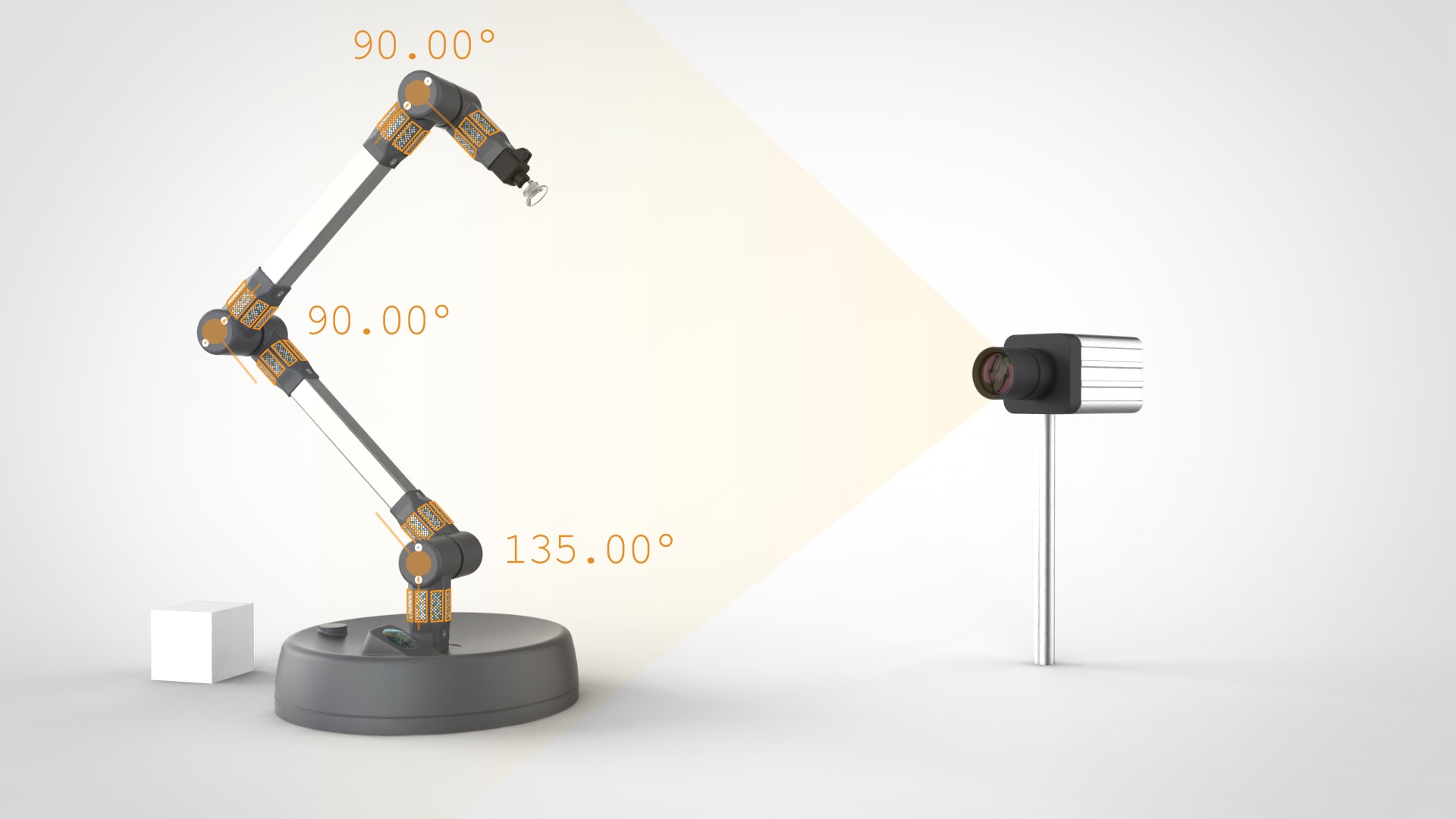

Explainer video: See how camera-based encoders work to build sensorless robot arms

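As a rough illustration of the principle, and not the ViseJoint implementation, a camera can estimate a joint angle by locating two points on each of the adjacent links in the image and measuring the signed angle between the two link directions. This sketch assumes the camera looks roughly along the joint axis (a planar view) and that marker pixel coordinates have already been found by a separate detection step. The function names `link_direction` and `joint_angle_deg` are hypothetical.

```python
import math


def link_direction(base, tip):
    """Vector from one detected point on a link to another, in pixels."""
    return (tip[0] - base[0], tip[1] - base[1])


def joint_angle_deg(parent_base, parent_tip, child_base, child_tip):
    """Signed angle (degrees) from the parent link to the child link.

    Assumes a planar view along the joint axis. Image coordinates have the
    y axis pointing down, so a positive result is clockwise on screen.
    """
    ux, uy = link_direction(parent_base, parent_tip)
    vx, vy = link_direction(child_base, child_tip)
    cross = ux * vy - uy * vx  # proportional to sin(angle)
    dot = ux * vx + uy * vy    # proportional to cos(angle)
    return math.degrees(math.atan2(cross, dot))


if __name__ == "__main__":
    # Parent link along +x, child link turned 90 degrees (downward on screen).
    print(joint_angle_deg((0, 0), (100, 0), (100, 0), (100, 50)))
```

Using `atan2` on the cross and dot products gives a full signed range of -180 to 180 degrees without a division, so the estimate stays well defined at any joint pose.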

Prototype/reference design of a small arm with camera-based encoders for low-cost and home robots

Video of prototype

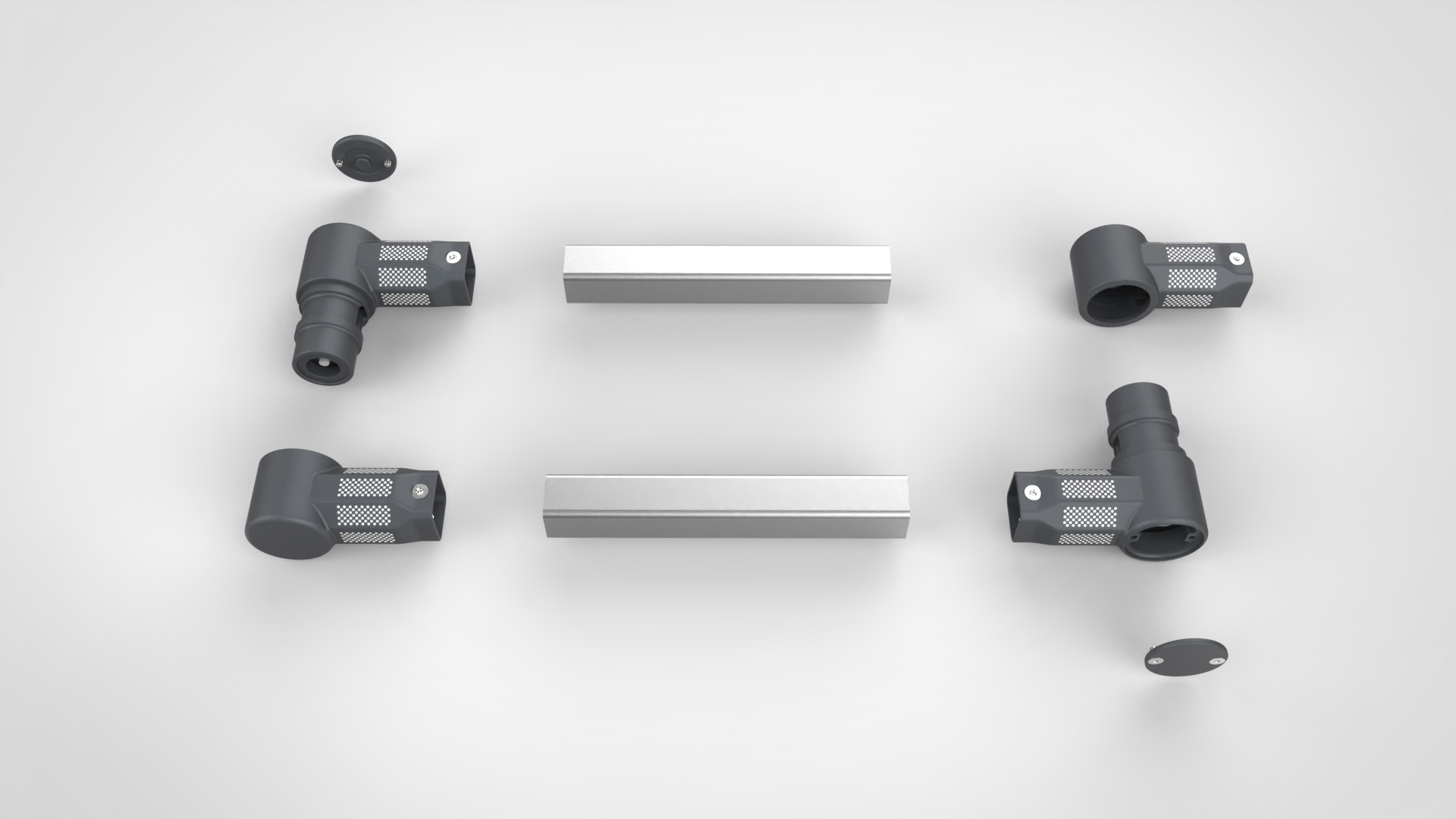

Prototype/reference design of a desktop arm with camera-based encoders using modular Igus-D components

Modular design with simplified hardware for quick adaptation and robust, low-cost robot arms

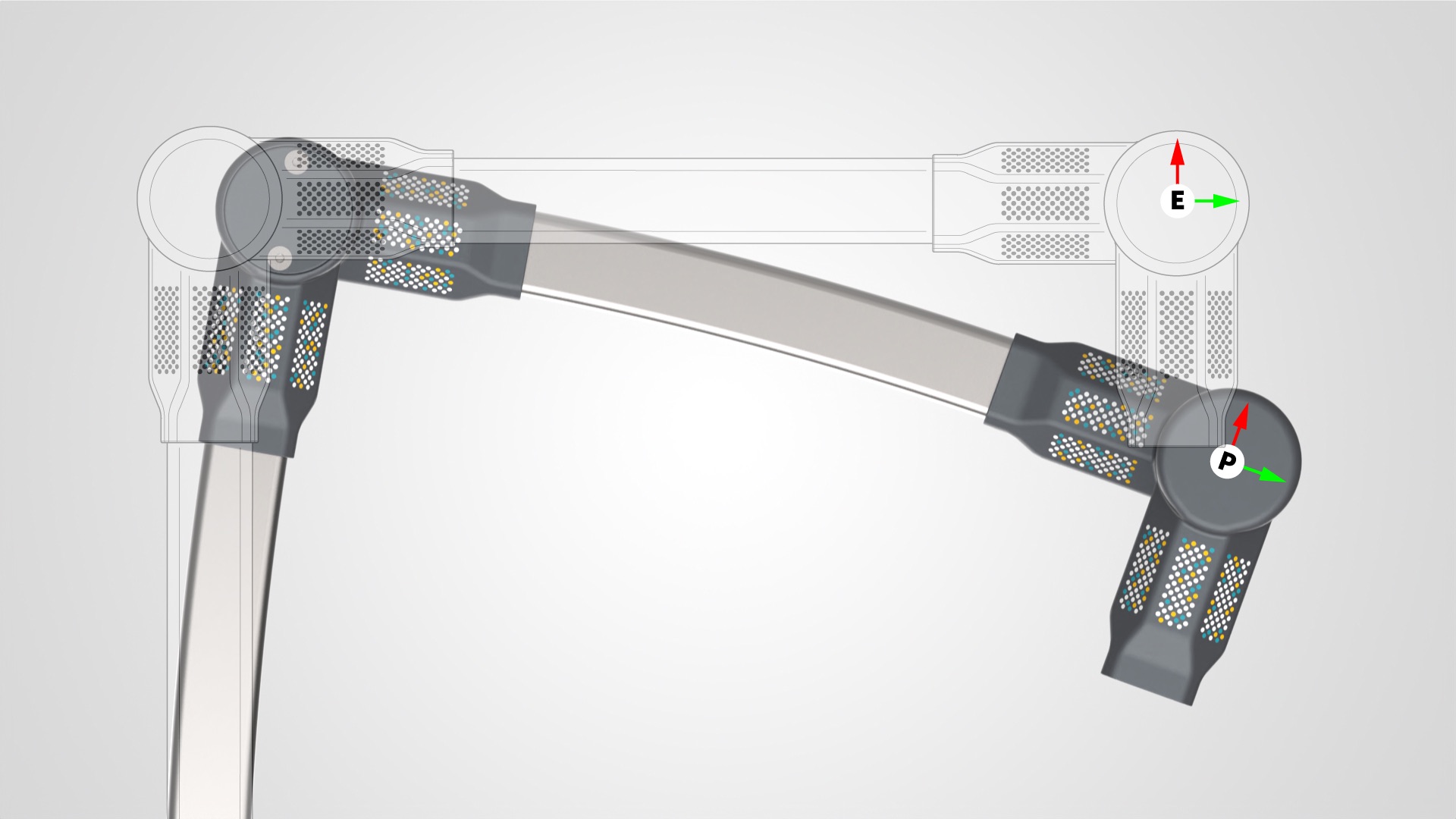

Camera-based encoders measure the true position (P), including the effects of gear backlash and link deflection, rather than the ideal position (E).
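To make the gap between the ideal position (E) and the true position (P) concrete, here is a minimal sketch using two common simplifications: backlash modeled as a "play" (dead-band) operator, and deflection approximated as an angular compliance under load. The class name, parameters and numbers are hypothetical and only illustrate why a sensor that observes the output directly sees P instead of E.

```python
from dataclasses import dataclass, field


@dataclass
class JointModel:
    backlash_deg: float          # total play in the gear train (hypothetical)
    stiffness_nm_per_deg: float  # lumped joint/link stiffness (hypothetical)
    _out: float = field(default=0.0, init=False)  # output angle before deflection

    def true_position(self, ideal_deg: float, load_torque_nm: float) -> float:
        """Return the true angle P for an ideal (drive-side) angle E."""
        half = self.backlash_deg / 2.0
        # Play operator: the output only moves once the input has crossed
        # the gap and pushes against one side of it.
        if ideal_deg - self._out > half:
            self._out = ideal_deg - half
        elif self._out - ideal_deg > half:
            self._out = ideal_deg + half
        # Linear compliance: positive load torque deflects the output negatively.
        deflection = load_torque_nm / self.stiffness_nm_per_deg
        return self._out - deflection


if __name__ == "__main__":
    joint = JointModel(backlash_deg=1.0, stiffness_nm_per_deg=10.0)
    for e, tau in [(10.0, 0.0), (9.8, 0.0), (8.0, 0.0), (8.0, 2.0)]:
        p = joint.true_position(e, tau)
        print(f"E={e:5.2f}  P={p:5.2f}  error={p - e:+.2f}")
```

In this toy model, reversing direction from 10.0 to 9.8 degrees leaves P unchanged because the input is still crossing the backlash gap, and adding a load shifts P further from E. A drive-side encoder would report E in both cases.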